Remote Backends with S3

Terraform state files are JSON data structures that map real-world infrastructure resources to the resource definitions in configuration files. A state file can be thought of as a blueprint of all the resources that Terraform manages.

In a real-world scenario, a Terraform configuration may contain numerous resources belonging to several different providers such as AWS, Azure, or GCP. Regardless of the size of the infrastructure, Terraform always creates a state file and uses it to keep track of the provisioned infrastructure.

Note: By default, the Terraform state file terraform.tfstate is stored locally in the configuration directory. This makes collaboration with team members difficult, because the state file lives on one specific user's machine.

Since state files may contain sensitive information about our provisioned infrastructure, it's not ideal to store them in a version control system such as GitHub, GitLab or BitBucket. It's a much better option to store Terraform state in secure shared storage by making use of remote backends.

Solution Overview

We will configure a remote backend which will allow Terraform to automatically load a state file from the shared storage, every time it is required by a Terraform operation. It will also upload the updated Terraform state file each time we run the terraform apply command.

Prerequisite

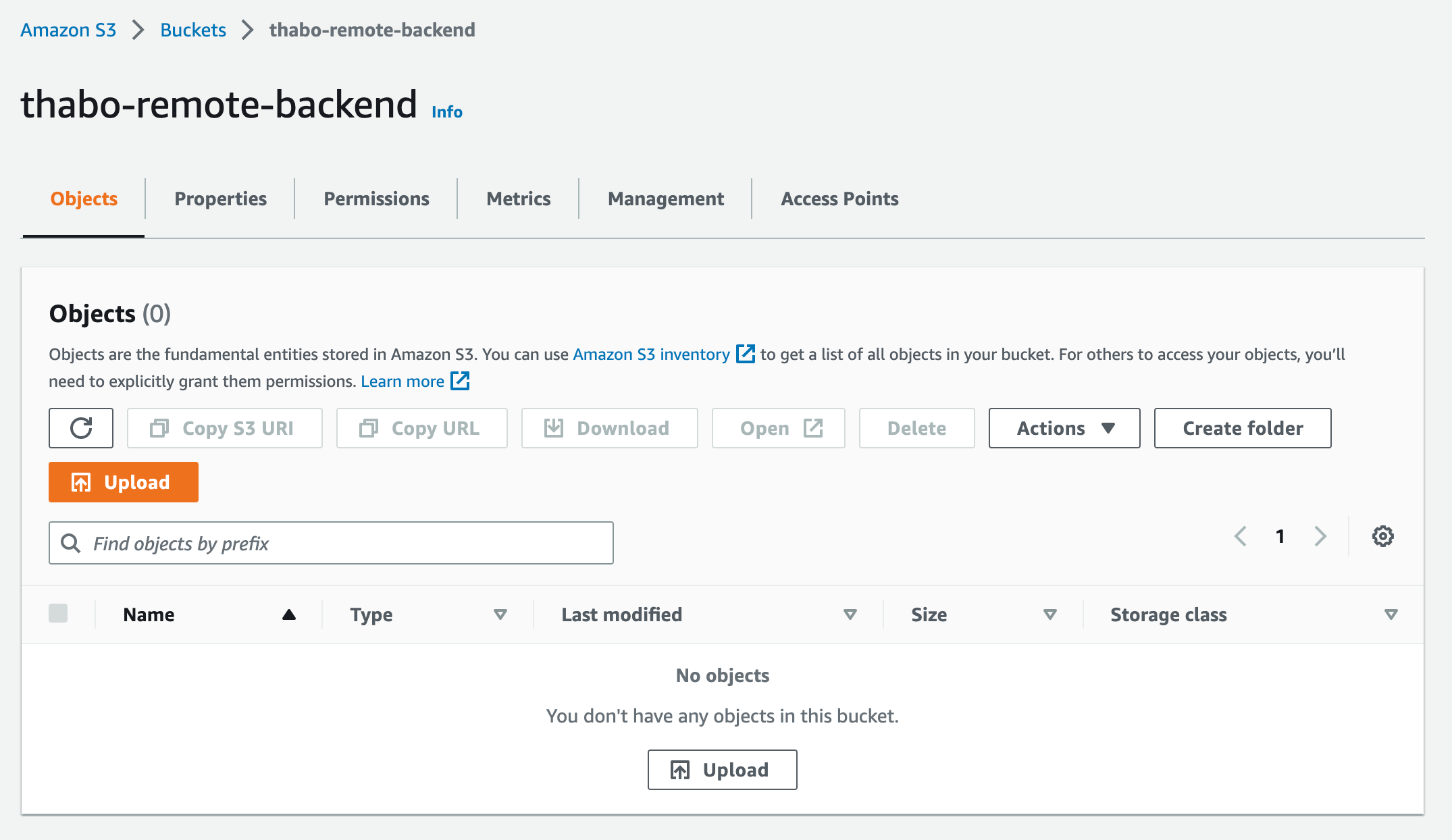

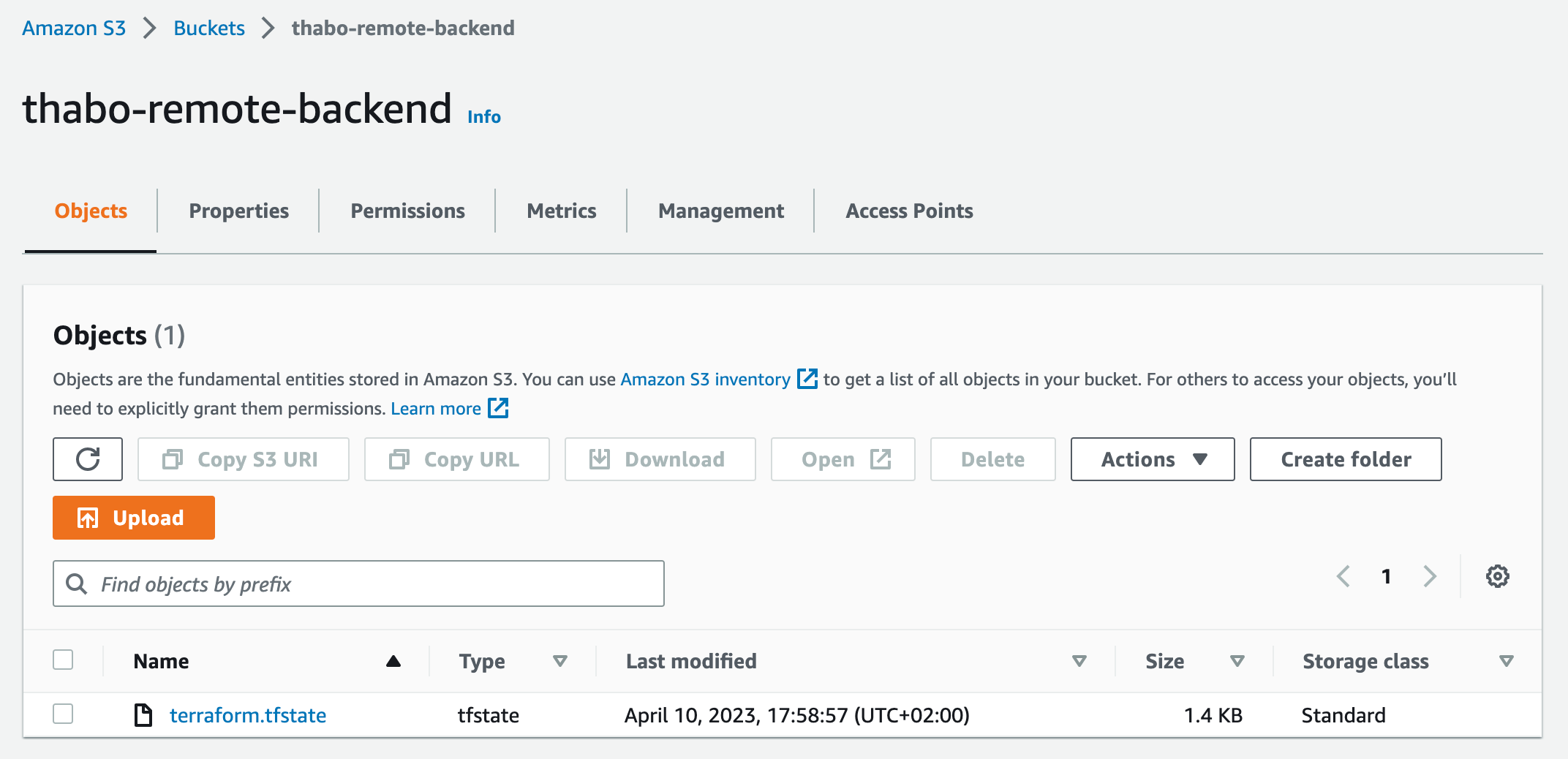

1. We need an S3 bucket, which will be used to store the Terraform state file:

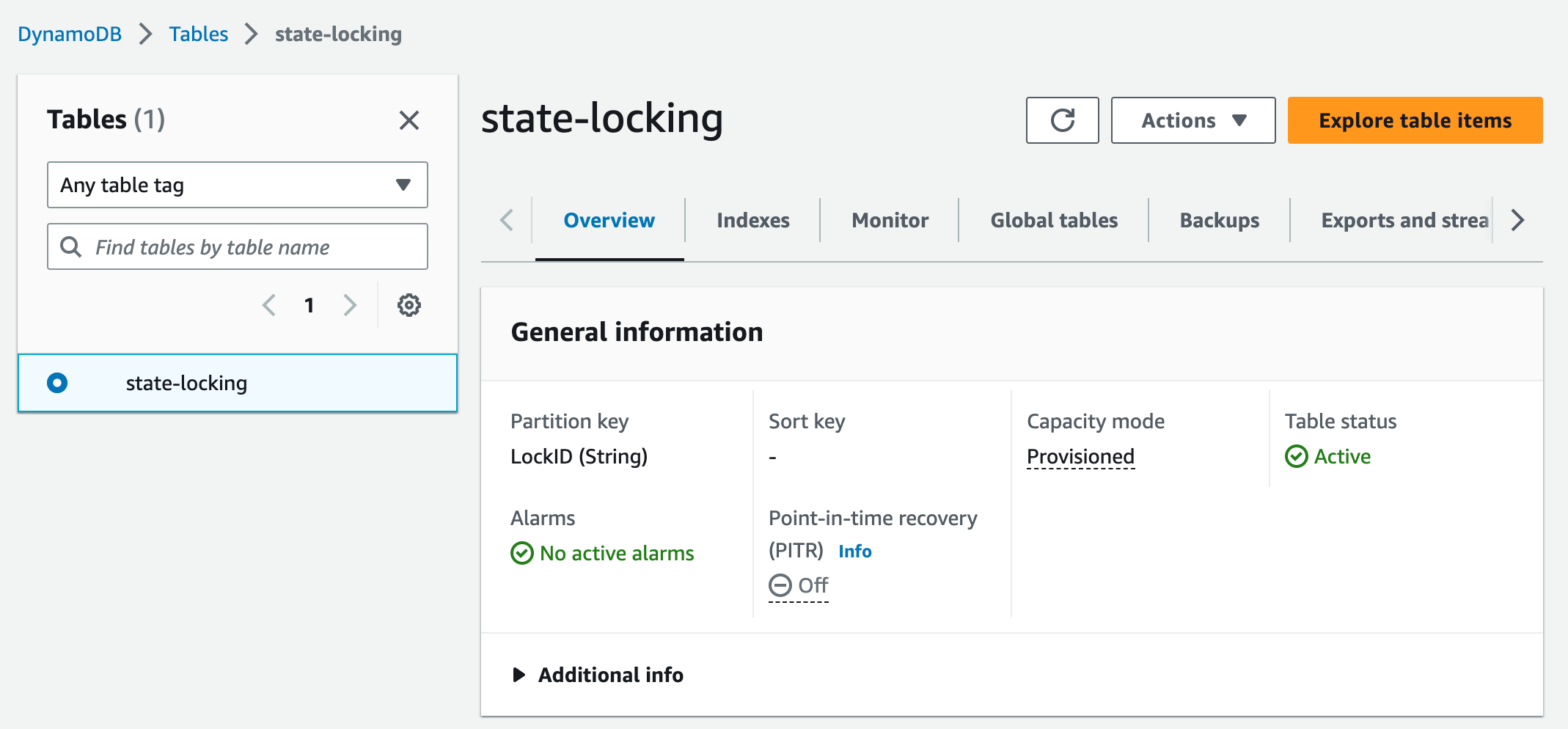

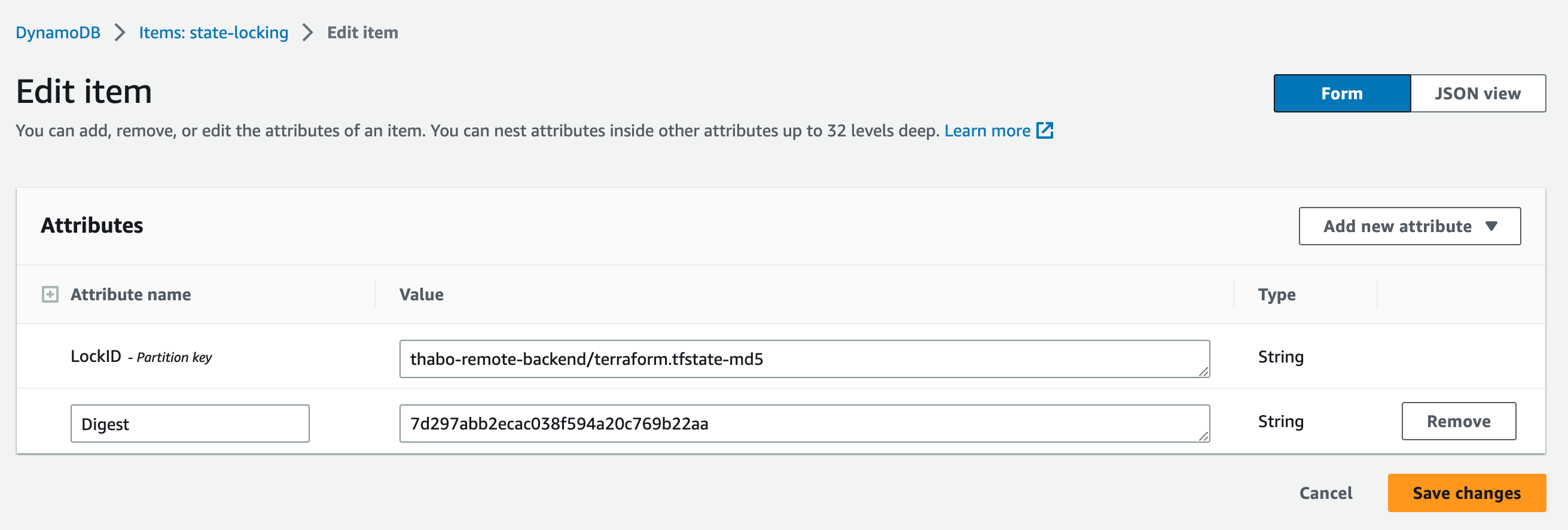

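2. We also need a DynamoDB table, which will be used to implement state locking and consistency checks. The S3 backend expects the table's partition key to be a string attribute named LockID.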
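If you prefer to create both prerequisites with Terraform rather than through the console, the following is a minimal sketch of how that could look. It assumes a separate bootstrap configuration that keeps its own local state, and it reuses the bucket name, table name, and region that appear in the backend block later in this article. The resource labels (state, lock), the versioning and public access settings, and the on-demand billing mode are illustrative assumptions, not part of the original setup.

```hcl
# Bootstrap configuration (assumed to live in its own directory).
provider "aws" {
  region = "af-south-1"
}

# Bucket that will hold the remote state file.
resource "aws_s3_bucket" "state" {
  bucket = "thabo-remote-backend"
}

# Assumption: versioning is enabled so earlier state versions can be recovered.
resource "aws_s3_bucket_versioning" "state" {
  bucket = aws_s3_bucket.state.id

  versioning_configuration {
    status = "Enabled"
  }
}

# Assumption: all public access is blocked, since state may hold sensitive data.
resource "aws_s3_bucket_public_access_block" "state" {
  bucket                  = aws_s3_bucket.state.id
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}

# Lock table; the S3 backend looks for a string partition key named LockID.
resource "aws_dynamodb_table" "lock" {
  name         = "state-locking"
  billing_mode = "PAY_PER_REQUEST" # assumption: on-demand capacity
  hash_key     = "LockID"

  attribute {
    name = "LockID"
    type = "S"
  }
}
```

Keeping these resources in their own configuration avoids the circular situation where the backend's bucket is managed by the very state it is supposed to store.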

Step 1: Provision a Resource

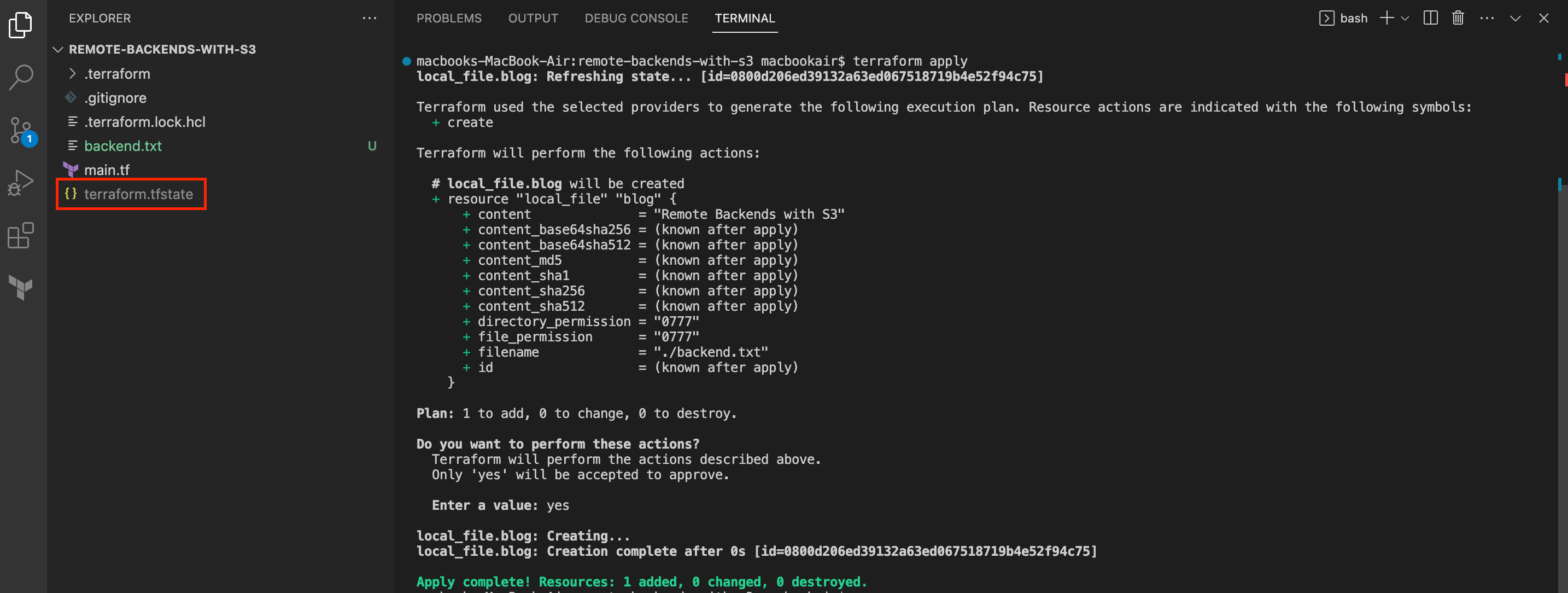

Our directory contains a configuration file that creates a local file resource. The code snippet is shown below:

    resource "local_file" "blog" {
      filename = "./backend.txt"
      content  = "Remote Backends with S3"
    }

main.tf configuration file

When we run terraform apply, the resource is created along with a local state file called terraform.tfstate in the configuration directory.

Step 2: Configure remote backend

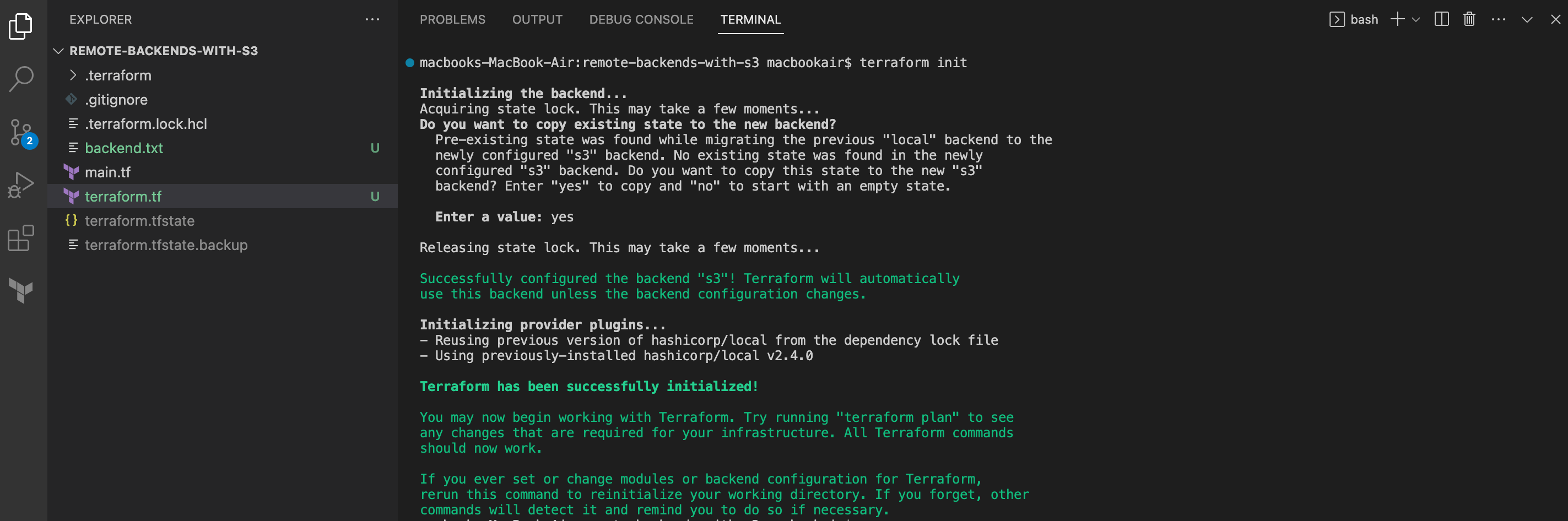

To configure a remote backend in Terraform to store our state file, we must define additional settings by making use of the terraform block in our configuration file:

    terraform {
      backend "s3" {
        bucket         = "thabo-remote-backend"
        key            = "terraform.tfstate"
        region         = "af-south-1"
        dynamodb_table = "state-locking"
      }
    }

terraform.tf configuration file

Within the terraform block, we specify the backend configuration and provide the values we recorded in the prerequisite section. To start using this new backend, we need to reinitialize the working directory by running the terraform init command.
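Because the state file may contain sensitive values, it can be worth asking the backend to request server-side encryption for the stored object. The following is a minimal sketch of the same backend block with the S3 backend's optional encrypt argument added. Enabling it is an assumption on our part and is not required by the setup described in this article.

```hcl
terraform {
  backend "s3" {
    bucket         = "thabo-remote-backend"
    key            = "terraform.tfstate"
    region         = "af-south-1"
    dynamodb_table = "state-locking"

    # Assumption: server-side encryption of the state object is wanted.
    encrypt = true
  }
}
```

A configuration can contain only one backend block, so this variant would replace the block in terraform.tf rather than sit alongside it, and terraform init has to be run again after any change to the backend settings.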

Step 3: Auto load and upload state file

Once the remote backend is configured and terraform init has copied the existing state to it, we can safely delete the local state file that was created in the previous sections by running the command below in the configuration directory:

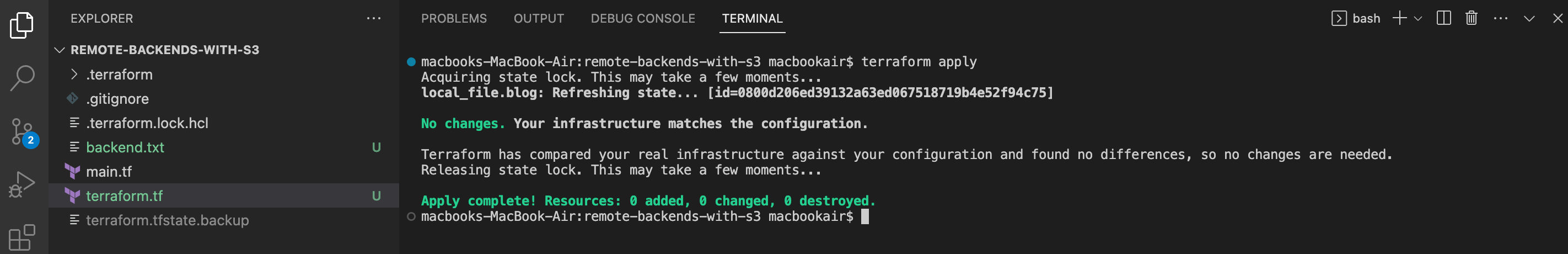

    rm -rf terraform.tfstate

Now, running terraform plan or terraform apply will lock the state file and pull it down from the S3 bucket into memory.

Any subsequent changes to the state will be uploaded to the remote backend automatically.

Once the operation is complete, the lock is released and the state file is no longer stored in the local directory.

Summary

It is a much better option to store Terraform state in secure shared storage by making use of remote backends. The state file no longer resides in the configuration directory or in a version control system.